Zeynep Bilgili, MD & Gabriel Brat, MD MPH MSc

August 13, 2025

When Sam Altman showcased GPT-5’s abilities in healthcare, we thought: let’s see what the hype is about. We ran it through seven high-stakes acute care surgery scenarios, each built around a series of questions from a patient to their ‘surgeon.’ We had already tested these scenarios on GPT-4 for an upcoming publication, with cases covering emergency surgery, low health literacy, tech distrust, obesity concerns, and financial barriers.

The goal: see if GPT-5 handled the interaction better, worse, or just… differently.

The “Overthinking”

One of the first things we noticed was that GPT-5 often thinks longer. In a clinical setting, especially acute care surgery, more thinking isn’t always better, and it isn’t always possible. We started calling this the “overthinking” factor. It is most obvious in the follow-up questions of a conversation.

A quick quantitative check showed:

Word count: GPT-4 averaged ~69 words per answer and GPT-5 also averaged ~69, but the distribution differed: GPT-5 often gave longer, more loaded first answers in a conversation.
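How words were counted isn’t specified here. As a rough illustration of how two models can share the same average length yet differ in shape, here is a minimal Python sketch. It assumes simple whitespace tokenization and treats each conversation as an ordered list of answers; `summarize_lengths` and the sample conversations are hypothetical, not the analysis code behind the figures.

```python
from statistics import mean, median
from typing import Optional


def word_count(text: str) -> int:
    # Whitespace tokenization: a crude but transparent proxy for "words".
    return len(text.split())


def summarize_lengths(answers: list[str]) -> dict:
    """Summarize answer lengths for one conversation (answers in turn order)."""
    counts = [word_count(a) for a in answers]
    follow_up_mean: Optional[float] = mean(counts[1:]) if len(counts) > 1 else None
    return {
        "mean": mean(counts),
        "median": median(counts),
        "first_answer": counts[0],
        "follow_up_mean": follow_up_mean,
    }


# Hypothetical conversations: same mean length, different distribution.
front_loaded = ["word " * 100, "word " * 40, "word " * 40]
even = ["word " * 60, "word " * 60, "word " * 60]
print(summarize_lengths(front_loaded))
print(summarize_lengths(even))
```

Both hypothetical conversations average 60 words, but only the per-turn breakdown shows that one front-loads its detail in the first answer.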

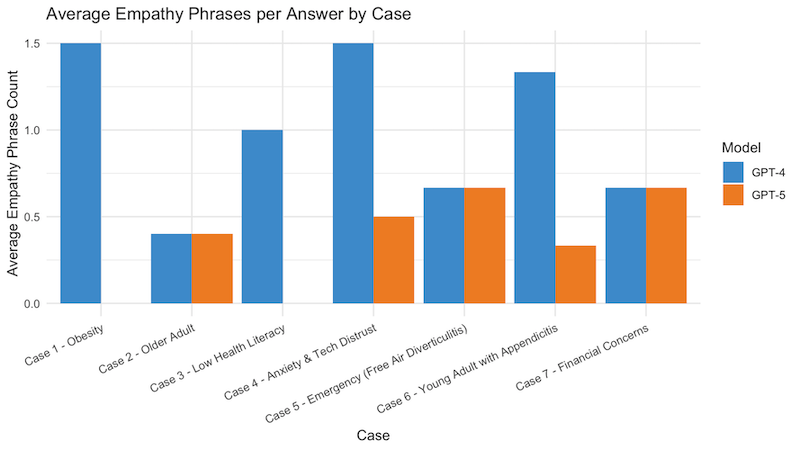

Empathy words: GPT-4 used more than twice as many empathy markers per answer as GPT-5 (e.g., “I understand your concern,” “I want you to feel confident”). GPT-5, while warmer in some spots, leaned more toward confident, directive language.

Figure 1: Average empathy phrases per answer across seven acute care surgery scenarios.
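The full list of empathy markers isn’t given. A minimal sketch of the per-answer average that Figure 1 describes might look like the following, assuming case-insensitive phrase matching; the two phrases are the examples quoted above, and any fuller marker list (plus the helper names `empathy_count` and `mean_empathy`) is hypothetical.

```python
# The two phrases are the examples quoted above; a real marker list is an
# assumption here and would be longer, ideally with clinical review.
EMPATHY_MARKERS = (
    "i understand your concern",
    "i want you to feel confident",
)


def empathy_count(answer: str, markers: tuple[str, ...] = EMPATHY_MARKERS) -> int:
    # Case-insensitive substring matching; counts every occurrence of each marker.
    text = answer.lower()
    return sum(text.count(marker) for marker in markers)


def mean_empathy(answers: list[str]) -> float:
    # Average number of marker phrases per answer across a set of answers.
    if not answers:
        raise ValueError("need at least one answer")
    return sum(empathy_count(a) for a in answers) / len(answers)


print(mean_empathy(["I understand your concern.", "The robot is a tool I control."]))
```

Exact phrase matching is deliberately simple: it misses paraphrases, so it undercounts empathy rather than inventing it.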

The Confidence

Patient: “I’ve heard on TV about the dangers of mesh. Will you use mesh?”

GPT-4: Explained that mesh might or might not be necessary depending on the case, promised to explain risks and benefits, and invited more discussion.

GPT-5: “I wouldn’t use mesh unless absolutely necessary.” Definitive, confident… and in this case, at odds with many surgeons’ actual practice.

High Body Weight: When Detail Works Well

On the flip side, GPT-5 shines when it gets procedure-specific. Take this case: a woman worried that her higher body weight would make her surgery riskier. GPT-5 added concrete details, such as adjusting port placement and using longer instruments: specifics that could make a patient feel the surgeon is prepared. GPT-4 gave a good answer, but with less color and detail.

Anxiety & Tech Distrust: A Tie

In the “I don’t trust machines” case, GPT-4 leaned empathetic, emphasizing “I’m in control, not the robot.” GPT-5 made the same point but also layered in the technology’s safety record and precision.

GPT-4: “From a patient perspective, if something goes wrong, I am still in the operating room directing the instruments… I have full control over how they’re being used.”

GPT-5: “The robot is a tool I control at every moment. It’s been used for over 20 years with strong safety records and allows me greater precision and access.”

The difference was in the tone: GPT-4 felt like a calming friend; GPT-5 felt like a TED talk.

Financial Concerns: The Quick Answer vs. The Breakdown

When our simulated patient asked if robotic surgery would cost them more, GPT-4 gave a straight-to-the-point reassurance, while GPT-5 unpacked the reasoning behind it.

GPT-4: “You’ll pay about the same as other minimally invasive options.”

GPT-5: “Hospitals don’t typically charge patients more for robotic surgery… the extra cost is on the hospital side, but your bill should be comparable.”

For reference, GPT-5’s response was actually pretty close to what a board-certified surgeon would say.

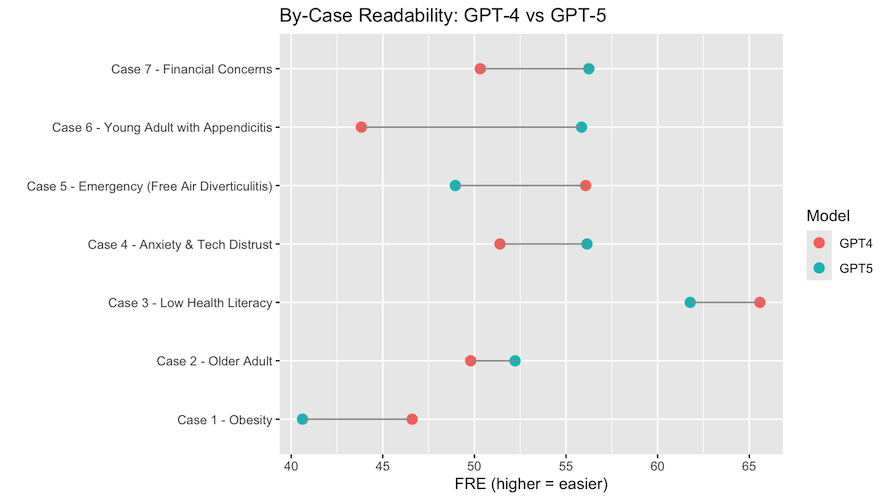

Low Health Literacy: Plain Talk vs. Surgical Speak

Faced with a simulated patient who might struggle with medical jargon, GPT-4 kept the explanation simple and concrete, while GPT-5 introduced more formal, technical language. Importantly, we did not give a prompt describing the patient or their education level, so each model had to infer this from the questions themselves.

GPT-4: “We’ll make a few small cuts in your belly to fix the problem. You’ll be asleep and won’t feel pain.”

GPT-5: “We’ll make a series of minimally invasive incisions for optimal access and visualization, ensuring safe removal of the problem area.”

Figure 2: By-case readability (Flesch Reading Ease) for GPT-4 vs GPT-5 across seven acute care surgery scenarios.
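Flesch Reading Ease is a standard formula: 206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words), where higher scores mean easier text. The tool used for Figure 2 isn’t specified, so the Python below is only a minimal sketch. Its vowel-group syllable counter and regex sentence splitter are assumptions; established tools use their own rules, so absolute scores will differ somewhat. It scores the two incision quotes above.

```python
import re


def count_syllables(word: str) -> int:
    # Heuristic (an assumption, not a dictionary lookup): count runs of
    # vowels, treating "y" as a vowel, and drop a silent trailing "e".
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def flesch_reading_ease(text: str) -> float:
    # Sentences end at runs of ".", "!" or "?"; empty fragments are ignored.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Words are letter runs; straight and curly apostrophes keep "we'll" whole.
    words = re.findall(r"[A-Za-z'’]+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        206.835
        - 1.015 * (len(words) / len(sentences))
        - 84.6 * (syllables / len(words))
    )


gpt4 = ("We'll make a few small cuts in your belly to fix the problem. "
        "You'll be asleep and won't feel pain.")
gpt5 = ("We'll make a series of minimally invasive incisions for optimal access "
        "and visualization, ensuring safe removal of the problem area.")
print(round(flesch_reading_ease(gpt4), 1), round(flesch_reading_ease(gpt5), 1))
```

Both quotes contain 20 words; the gap comes from GPT-5 packing them into one sentence and using polysyllabic terms like “visualization,” which is exactly what the formula penalizes.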

What GPT-5 Thinks of Itself

For fun, we asked GPT-5 to compare its own answers to GPT-4’s. To partially blind it, the prompt presented the answers as coming from two different sources. GPT-5 judged its own answers better, citing richer detail, more actionable explanations, and a stronger balance of honesty and optimism. We agree that GPT-5 provided more procedure-specific and developed answers. But in the world of surgery, confidence needs to be backed up with accuracy and reassurance.
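The comparison prompt itself isn’t reproduced here. One simple way to implement this kind of light blinding is to shuffle the two answers under neutral labels and keep the answer key out of the prompt. The sketch below assumes that design; `blind_pair`, the “Source A/B” labels, and the prompt wording are hypothetical, and sending the prompt to a model is left out.

```python
import random
from typing import Optional


def blind_pair(
    answer_gpt4: str, answer_gpt5: str, seed: Optional[int] = None
) -> tuple[str, dict[str, str]]:
    """Build a comparison prompt that hides which model wrote which answer."""
    rng = random.Random(seed)  # seeded for a reproducible label assignment
    items = [("GPT-4", answer_gpt4), ("GPT-5", answer_gpt5)]
    rng.shuffle(items)
    # The key maps neutral labels back to models; it never enters the prompt.
    key = {"Source A": items[0][0], "Source B": items[1][0]}
    prompt = (
        "Two different sources answered the same patient question.\n"
        f"Source A: {items[0][1]}\n"
        f"Source B: {items[1][1]}\n"
        "Which answer is better for the patient, and why?"
    )
    return prompt, key


prompt, key = blind_pair(
    "You'll pay about the same as other minimally invasive options.",
    "Hospitals don't typically charge patients more for robotic surgery.",
    seed=7,
)
print(prompt)
```

Keeping the key separate lets the model’s verdict be scored afterward without the model names ever leaking into the prompt.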

The Takeaway

After this small, informal experiment, GPT-5 feels like it’s just been handed a brand-new, razor-sharp scalpel. It’s proud of it, eager to show it off, and sometimes a bit too sure of itself. But the sharpest tool in the room still depends on the judgment and touch of the person holding it. This mini-experiment serves mainly as a reminder: this tool was not built specifically for healthcare, yet it can often deliver strong, reassuring answers, and it is getting better every day. The constant development and momentum in large language models are exciting for those of us building better tools for our surgical patients. AI can bring precision and efficiency to surgery; for conversational agents, successful patient interaction depends on pairing those strengths with the right dose of judgment and empathy.